Robotic Car | Seminar Report for B.Tech Mechanical Students

We’re pretty familiar with autonomous cars around here, and we’ve even been treated to a ride in one of Stanford’s robots at their automotive innovation lab, which they launched in partnership with Volkswagen. You might also remember Shelley, their autonomous Audi TTS, which autonomously raced to the top of Pikes Peak last year.

Volkswagen’s thinking behind all of this high performance autonomous car stuff is that at some point, they’ll be able to program your car to be a far, far better driver than you could ever be, and it’ll have the ability to pull some crazy maneuvers to save you from potential accidents. Google, which is just down the road from Stanford, seems to understand this, and they’ve turned their autonomous cars up to “aggressive” in a driving demo that they gave to some lucky sods in a parking lot at the TED conference in Long Beach. It’s pretty impressive.

This might seem dangerous, but arguably, this demo is likely safer than a human driving around the parking area at normal speeds, if we assume that the car’s sensors are all switched on and it’s not just playing back a preset path. The fact is that a car equipped with radar and LIDAR and such can take in much more information, process it much more quickly and reliably, make a correct decision about a complex situation, and then implement that decision far better than a human can.

This is especially true if we consider the type of research that is being done with Shelley to teach cars how to make extreme maneuvers safely. So why aren’t we all driving autonomous cars already? It’s not a technical problem; there are several cars on the road right now with lane sensing, blind spot detection and adaptive cruise control, which could be combined to allow for autonomous highway driving. Largely, the reasons seem to be legal: there’s no real framework or precedent for yielding control of a vehicle to an autonomous system, and nobody knows exactly who to blame or sue if something goes wrong.

And furthermore, the first time something does go wrong, it’s going to be like a baseball bat to the face of the entire robotics industry. Anyway, enough of the depressing stuff. For what it’s worth, “aggressive” is apparently one of four different driving personalities that you have the option of choosing from every time you start up one of Google’s robot cars.

LIDAR (Light Detection And Ranging)

LIDAR (Light Detection And Ranging, also LADAR) is an optical remote sensing technology that can measure the distance to, or other properties of, a target by illuminating the target with light, often using pulses from a laser. LIDAR technology has applications in geomatics, archaeology, geography, geology, geomorphology, seismology, forestry, remote sensing and atmospheric physics, as well as in airborne laser swath mapping (ALSM), laser altimetry and LIDAR contour mapping. The acronym LADAR (Laser Detection and Ranging) is often used in military contexts.

The term “laser radar” is sometimes used, even though LIDAR does not employ microwaves or radio waves and is therefore not, strictly speaking, related to radar. LIDAR uses ultraviolet, visible, or near infrared light to image objects and can be used with a wide range of targets, including non-metallic objects, rocks, rain, chemical compounds, aerosols, clouds and even single molecules. A narrow laser beam can be used to map physical features with very high resolution. LIDAR has been used extensively for atmospheric research and meteorology. Downward-looking LIDAR instruments fitted to aircraft and satellites are used for surveying and mapping; a recent example is the NASA Experimental Advanced Research Lidar. In addition, LIDAR has been identified by NASA as a key technology for enabling autonomous, precise and safe landing of future robotic and crewed lunar landing vehicles. Wavelengths in a range from about 10 micrometers to the UV (ca. 250 nm) are used to suit the target. Typically, light is reflected via backscattering.
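The distance measurement described above can be sketched for a pulsed laser as a time-of-flight calculation: the pulse travels to the target and back, so the range is half the round-trip path. This is a minimal sketch, not the processing used by any particular sensor. The function names and the (bearing, round-trip time) scan format are assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(round_trip_time_s):
    """Distance to the target (metres) from a pulse's round-trip time (seconds)."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def scan_to_points(scan):
    """Convert (bearing_deg, round_trip_time_s) pairs into (x, y) points in metres.

    Assumed convention: bearing 0 points along +x, angles grow counter-clockwise.
    """
    points = []
    for bearing_deg, round_trip_time_s in scan:
        r = range_from_round_trip(round_trip_time_s)
        theta = math.radians(bearing_deg)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Hypothetical three-beam scan; the times are made-up illustration values.
    demo_scan = [(0.0, 2.0e-7), (45.0, 1.5e-7), (90.0, 1.0e-7)]
    for x, y in scan_to_points(demo_scan):
        print(f"x = {x:.2f} m, y = {y:.2f} m")
```

Turning each return into a Cartesian point is what lets a narrow scanning beam build up a map of physical features, one range sample at a time.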

Google Street View

Google Street View is a technology featured in Google Maps and Google Earth that provides panoramic views from various positions along many streets in the world. It was launched on May 25, 2007, originally only in several cities in the United States, and has since gradually expanded to include more cities and rural areas worldwide. Google Street View displays images taken from a fleet of specially adapted cars. Areas not accessible by car, like pedestrian areas, narrow streets, alleys and ski resorts, are sometimes covered by Google Trikes (tricycles) or a snowmobile. Each of these vehicles carries nine directional cameras for 360° views at a height of about 8.2 feet (2.5 meters), GPS units for positioning, and three laser range scanners that measure distances of up to 50 meters across 180° in front of the vehicle.

There are also 3G/GSM/Wi-Fi antennas for scanning 3G/GSM and Wi-Fi hotspots. Recently, ‘high quality’ images are based on open source hardware cameras from Elphel. Where available, street view images appear after zooming in beyond the highest zooming level in maps and satellite images, and also by dragging a “pegman” icon onto a location on a map. Using the keyboard or mouse the horizontal and vertical viewing direction and the zoom level can be selected. A solid or broken line in the photo shows the approximate path followed by the camera car, and arrows link to the next photo in each direction. At junctions and crossings of camera car routes, more arrows are shown.

Interactive algorithms for path following involve direct communication with external sources, such as receiving navigation data from the leader or consulting GPS coordinates. The Follow-the-Past algorithm is one such example; it involves receiving and interpreting position data, orientation data, and steering angle data from a leader vehicle. The objective is to mimic these three navigational properties in order to accurately follow the path set by the leader.

As orientation and steering angle are associated with GPS positional data, the following vehicle can update its navigational state to match that of the leader vehicle at the appropriate moment in time. One developed algorithm is best described as placing a trail of breadcrumbs based on the leading vehicle’s position. A cubic spline fit is applied to the generated breadcrumbs to establish a smooth path along which to travel. This algorithm was tested and showed centimeter-level precision in following a desired path.
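The breadcrumb idea can be sketched as follows: treat the leader’s reported positions as knots and fit a cubic spline through them. The source does not say which spline variant or parameterization was used, so this sketch assumes a natural cubic spline (zero curvature at both ends) parameterized by cumulative straight-line distance between breadcrumbs. The names `natural_cubic_spline` and `fit_breadcrumb_path` are hypothetical.

```python
import bisect
import math


def natural_cubic_spline(t, y):
    """Return S(x), the natural cubic spline through the points (t[i], y[i])."""
    n = len(t) - 1  # number of intervals
    if n < 1:
        raise ValueError("need at least two points")
    h = [t[i + 1] - t[i] for i in range(n)]
    if any(step <= 0 for step in h):
        raise ValueError("knots must be strictly increasing")

    # m[i] is the second derivative at knot i; natural ends fix m[0] = m[n] = 0.
    m = [0.0] * (n + 1)
    if n >= 2:
        diag = [2.0 * (h[j] + h[j + 1]) for j in range(n - 1)]
        rhs = [6.0 * ((y[j + 2] - y[j + 1]) / h[j + 1] - (y[j + 1] - y[j]) / h[j])
               for j in range(n - 1)]
        # Tridiagonal solve (Thomas algorithm): eliminate forward, substitute back.
        for j in range(1, n - 1):
            w = h[j] / diag[j - 1]
            diag[j] -= w * h[j]
            rhs[j] -= w * rhs[j - 1]
        m[n - 1] = rhs[n - 2] / diag[n - 2]
        for j in range(n - 3, -1, -1):
            m[j + 1] = (rhs[j] - h[j + 1] * m[j + 2]) / diag[j]

    def evaluate(x):
        # Pick the interval containing x, clamped to the first/last interval.
        i = min(max(bisect.bisect_right(t, x) - 1, 0), n - 1)
        a = t[i + 1] - x
        b = x - t[i]
        return ((m[i] * a ** 3 + m[i + 1] * b ** 3) / (6.0 * h[i])
                + (y[i] / h[i] - m[i] * h[i] / 6.0) * a
                + (y[i + 1] / h[i] - m[i + 1] * h[i] / 6.0) * b)

    return evaluate


def fit_breadcrumb_path(breadcrumbs):
    """Fit x(s) and y(s) through leader positions; s is cumulative chord length."""
    s = [0.0]
    for (x0, y0), (x1, y1) in zip(breadcrumbs, breadcrumbs[1:]):
        s.append(s[-1] + math.hypot(x1 - x0, y1 - y0))
    spline_x = natural_cubic_spline(s, [p[0] for p in breadcrumbs])
    spline_y = natural_cubic_spline(s, [p[1] for p in breadcrumbs])
    return s, lambda u: (spline_x(u), spline_y(u))


if __name__ == "__main__":
    # Hypothetical leader positions in metres.
    crumbs = [(0.0, 0.0), (1.0, 0.5), (2.0, 2.0), (3.0, 2.5), (4.0, 4.0)]
    s, path = fit_breadcrumb_path(crumbs)
    for k in range(9):
        u = s[-1] * k / 8
        print("(%.3f, %.3f)" % path(u))
```

Parameterizing by distance along the trail, rather than by x, keeps the fit well defined when the leader turns back on itself. Two identical consecutive breadcrumbs would give a zero-length interval, which this sketch rejects with an error rather than handling.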

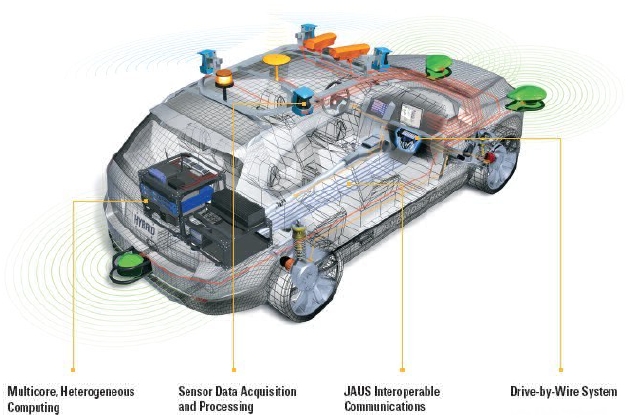

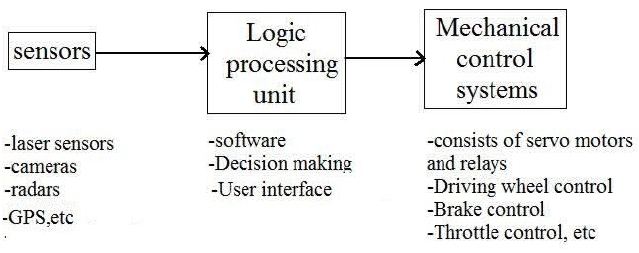

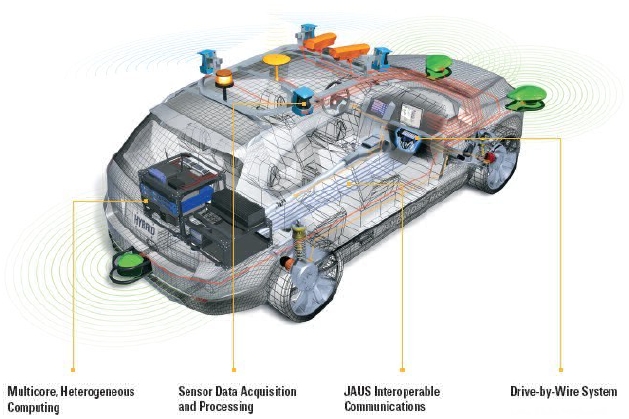

Sensor Data Acquisition

Sensor data acquisition means gathering the data that the sensors collect from the current environment; the collected data is then processed. LabVIEW applications running on multicore servers with Linux and Windows OSs processed and analyzed data from three IBEO ALASCA multiplanar LIDARs, four SICK LMS LIDARs, two IEEE 1394 cameras, and one NovAtel GPS/INS. Ethernet cables acted as the interface for all sensors.

Cameras

Google has used three types of car-mounted cameras in the past to take Street View photographs. Generations 1–3 were used to take photographs in the United States. The first generation was quickly superseded, and its images were replaced with images taken with 2nd and 3rd generation cameras. Second generation cameras were used to take photographs in Australia. The shadows caused by the 1st, 2nd and 4th generation cameras are occasionally visible in images taken in mornings and evenings. The new 4th generation cameras will be used to completely replace all images taken with earlier generation cameras. 4th generation cameras take near-HD images and deliver much better quality than earlier cameras.

GPS

The Global Positioning System (GPS) is a space-based global navigation satellite system (GNSS) that provides location and time information in all weather, anywhere on or near the Earth where there is an unobstructed line of sight to four or more GPS satellites. A GPS receiver calculates its position by precisely timing the signals sent by GPS satellites high above the Earth. Each satellite continually transmits messages that include:

• the time the message was transmitted

• precise orbital information (the ephemeris)

• the general system health and rough orbits of all GPS satellites (the almanac)

The receiver uses the messages it receives to determine the transit time of each message and computes the distance to each satellite. These distances, along with the satellites’ locations, are used to compute the position of the receiver, possibly with the aid of trilateration, depending on which algorithm is used.
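The two steps described above, transit time to distance and distances to position, can be sketched as a plain trilateration. This is a simplified illustration under stated assumptions: it takes exactly four satellites with known Cartesian positions and ignores the receiver clock error, which a real receiver must also estimate. The function names and the satellite coordinates are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def det3(a):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def trilaterate(satellites, transit_times_s):
    """Estimate the receiver (x, y, z) in metres from four satellites.

    satellites: four (x, y, z) positions in metres.
    transit_times_s: signal travel time from each satellite, in seconds.
    Assumption: the receiver clock is perfect (no clock-bias unknown).
    """
    if len(satellites) != 4 or len(transit_times_s) != 4:
        raise ValueError("this sketch expects exactly four satellites")
    # Step 1: distance to each satellite = speed of light * transit time.
    ranges = [SPEED_OF_LIGHT_M_PER_S * dt for dt in transit_times_s]

    # Step 2: subtract the first sphere equation from the other three.
    # That cancels the quadratic term and leaves a 3x3 linear system A p = b.
    s0, r0 = satellites[0], ranges[0]
    norm0 = sum(c * c for c in s0)
    a, b = [], []
    for si, ri in zip(satellites[1:], ranges[1:]):
        a.append([2.0 * (si[k] - s0[k]) for k in range(3)])
        b.append(sum(c * c for c in si) - norm0 - ri * ri + r0 * r0)

    d = det3(a)
    if d == 0.0:
        raise ValueError("satellite geometry is degenerate")
    # Step 3: solve with Cramer's rule (replace one column of A by b at a time).
    solution = []
    for col in range(3):
        replaced = [row[:col] + [b[i]] + row[col + 1:] for i, row in enumerate(a)]
        solution.append(det3(replaced) / d)
    return tuple(solution)


if __name__ == "__main__":
    # Made-up satellite and receiver positions, for illustration only.
    sats = [(20e6, 0.0, 0.0), (0.0, 20e6, 0.0), (0.0, 0.0, 20e6), (12e6, 12e6, 12e6)]
    receiver = (3.0e6, 4.0e6, 3.5e6)
    times = [math.dist(s, receiver) / SPEED_OF_LIGHT_M_PER_S for s in sats]
    print(trilaterate(sats, times))
```

In practice the receiver clock is not synchronized with the satellites, so the fourth satellite is typically used to solve for a clock offset as a fourth unknown rather than to over-determine the position as here.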

Conclusion

What are the ethical positions of potential buyers, manufacturers and service providers? Would a buyer ignore the ethical twists of autonomous car control systems, in a world in which equity investments with tobacco or slave labour content are shunned by some? What would be the main ethical purchase/hire decision points?

With the Value Stream concept as studied by Womack and Jones (2003), even the Inevitable Collision State is not a bad thing when it occurs. The car shall make measurements and report to insurance, law enforcement and other authorities. Other value streams shall then instantly sprout: those of insurance, judiciary/litigation, law enforcement, medevac, news reporting (mass media), etc. The car then autonomously drives away from the scene of the accident, if that is possible.

REFERENCES

• IEEE Spectrum, posted by Evan Ackerman, Fri, March 04, 2011

• Google Street View: Capturing the World at Street Level; Dragomir Anguelov, Carole Dulong, Daniel Fillip, Christian Frueh, Stéphane Lafon, Richard Lyon, Abhijit Ogale, Luc Vincent, and Josh Weaver, Google

• Modelling and identification of passenger car dynamics using robotics formalism; Gentiane Venture (IEEE Member), Pierre-Jean Ripert, Wisama Khalil (IEEE Senior Member), Maxime Gautier, Philippe Bodson